Fall 2013 Assignment

Bachelor of Science in Information Technology (BSc IT) – Semester 5

BT8901 – Object Oriented Systems – 4 Credits

(Book ID: B1185)

Assignment Set (60 Marks)

1. Write a note on Principles of Object Oriented Systems.

Ans.- The object model comes with a lot of terminology. A Smalltalk programmer uses methods, a C++ programmer uses virtual member functions, and a CLOS programmer uses generic functions. An Object Pascal programmer talks of a type coercion; an Ada programmer calls the same thing a type conversion. To minimize the confusion, let’s see what object orientation is.

Bhaskar has observed that the phrase object-oriented “has been bandied about with carefree abandon with much the same reverence accorded ‘motherhood,’ ‘apple pie,’ and ‘structured programming’”. We can agree that the concept of an object is central to anything object-oriented. Stefik and Bobrow define objects as “entities that combine the properties of procedures and data since they perform computations and save local state”. Defining objects as entities begs the question somewhat, but the basic concept here is that objects serve to unify the ideas of algorithmic and data abstraction. Jones further clarifies this term by noting that “in the object model, emphasis is placed on crisply characterizing the components of the physical or abstract system to be modeled by a programmed system…. Objects have a certain ‘integrity’ which should not – in fact, cannot – be violated. An object can only change state, behave, be manipulated, or stand in relation to other objects in ways appropriate to that object.” An object is characterized by its properties and behavior.

Object-Oriented Programming:- Object-oriented programming is a method of implementation in which programs are organized as cooperative collections of objects, each of which represents an instance of some class, and whose classes are all members of a hierarchy of classes united via inheritance relationships.

There are three important parts to this definition: object-oriented programming (1) uses objects, not algorithms, as its fundamental logical building blocks; (2) each object is an instance of some class; and (3) classes are related to one another via inheritance relationships.
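
The three parts of this definition can be sketched in Python. This is only an illustration: the class names Account, SavingsAccount, and CheckingAccount and the fee values are assumptions made for the example, not something taken from the text.

```python
class Account:
    """Base class at the top of a small class hierarchy."""

    def __init__(self, balance):
        self.balance = balance

    def monthly_fee(self):
        # Default behavior shared by every kind of account.
        return 5


class SavingsAccount(Account):
    """Related to Account via inheritance; overrides one behavior."""

    def monthly_fee(self):
        return 0


class CheckingAccount(Account):
    """Inherits monthly_fee unchanged from Account."""


# The program is organized as a collection of objects,
# each an instance of some class in the hierarchy.
accounts = [SavingsAccount(100), CheckingAccount(50)]
fees = [account.monthly_fee() for account in accounts]
```

Here the objects, not a central algorithm, are the building blocks: each object is an instance of a class, and the classes are related through inheritance.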

Object-Oriented Design:- Generally, the design methods emphasize the proper and effective structuring of a complex system. Let’s see the explanation for object-oriented design.

Object-oriented design is a method of design encompassing the process of object-oriented decomposition and a notation for depicting both logical and physical as well as static and dynamic models of the system under design.

There are two important parts to this

definition: object-oriented design (1) leads to an object-oriented

decomposition and (2) uses different notations to express different models of

the logical (class and object structure) and physical (module and process

architecture) design of a system, in addition to the static and dynamic aspects

of the system.

Object-Oriented Analysis:- Object-oriented analysis (or OOA, as it is sometimes called) emphasizes the building of real-world models, using an object-oriented view of the world. Object-oriented analysis is a method of analysis that examines requirements from the perspective of the classes and objects found in the vocabulary of the problem domain.

2. What are objects? Explain characteristics of objects.

Ans.- The term object was first formally

utilized in the Simula language. The term object means a combination of data

and logic that represents some real world entity.

When developing an object-oriented application, two basic questions always arise:

What objects does the application need?

What functionality should those objects have?

Programming

in an object-oriented system consists of adding new kinds of objects to the

system and defining how they behave.

The

different characteristics of the objects are:

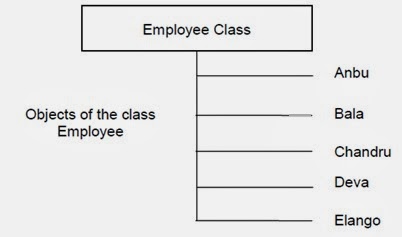

i) Objects are grouped in classes:- A class is a set of objects that share a common structure and a common behavior; a single object is simply an instance of a class. A class is a specification of structure (instance variables), behavior (methods), and inheritance for objects.

Anbu, Bala, Chandru, Deva, and Elango are instances or objects of the class Employee.
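
A minimal Python sketch of this example follows. The Employee class and the five names come from the text; the salary attribute, its starting value, and the raise_salary method are assumptions added only to show shared structure and behavior.

```python
class Employee:
    """Specification of structure (instance variables) and behavior (methods)."""

    def __init__(self, name, salary):
        self.name = name        # structure shared by every Employee
        self.salary = salary    # hypothetical attribute for illustration

    def raise_salary(self, percent):
        # Behavior shared by every Employee.
        self.salary += self.salary * percent / 100


names = ["Anbu", "Bala", "Chandru", "Deva", "Elango"]

# Each element is a separate object (instance) of the one class Employee.
employees = [Employee(name, 30000) for name in names]

# Changing one instance's state does not affect the others.
employees[0].raise_salary(10)
```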

Attributes: Object state and properties

Properties represent the state of an object. For example, in a car object, the manufacturer could be denoted by a name, a reference to a manufacturer object, or a corporate tax identification number. In general, an object’s abstract state can be independent of its physical representation.

The attributes

of a car object

ii) Objects have

attributes and methods:- A method is a function or procedure that is defined

for a class and typically can access the internal state of an object of that

class to perform some operation. Behavior denotes the collection of methods

that abstractly describes what an object is capable of doing. Each procedure defines

and describes a particular behavior of the object. The object, called the

receiver, is that on which the method operates. Methods encapsulate the

behavior of the object. They provide interfaces to the object, and hide any of

the internal structures and states maintained by the object.
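
As a rough Python sketch of methods acting as the interface to hidden state, consider the car object again. The names drive, mileage, and _odometer_km are hypothetical, chosen only for this illustration.

```python
class Car:
    def __init__(self, manufacturer):
        self.manufacturer = manufacturer
        # Internal state: the leading underscore marks it, by Python
        # convention, as something callers should not touch directly.
        self._odometer_km = 0.0

    def drive(self, km):
        """Method operating on the receiver (self) to change its state."""
        if km < 0:
            raise ValueError("distance must be non-negative")
        self._odometer_km += km

    def mileage(self):
        """Method exposing state without revealing how it is stored."""
        return self._odometer_km


car = Car("Acme Motors")
car.drive(12.5)
car.drive(7.5)
total = car.mileage()
```

Callers use drive and mileage only; the way the distance is stored could change without affecting them.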

iii) Objects respond to messages:- Objects perform operations in response to messages. The message is the instruction and the method is the implementation. An object or an instance of a class understands messages. A message has a name, just like a method, such as cost, set cost, or cooking time. An object understands a message when it can match the message to a method that has the same name as the message. To match up the message, an object first searches the methods defined by its class. If it is found, that method is called up. If not found, the object searches the superclass of its class. If it is found in a superclass, then that method is called up. Otherwise, it continues the search upward. An error occurs only if none of the superclasses contains the method.
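
The search described above can be made explicit in a short Python sketch. Python performs this lookup automatically on every method call; the send function below is a hypothetical helper written only to show the steps, and the classes Food and Dish are assumptions built around the message names cost and cooking time.

```python
class Food:
    def cost(self):
        return 10


class Dish(Food):
    def cooking_time(self):
        return 30


def send(receiver, message, *args):
    """Match a message name to a method, searching the class and then its superclasses."""
    # __mro__ lists the receiver's class first, then each superclass in order.
    for cls in type(receiver).__mro__:
        if message in vars(cls):
            # Found: call the method with the receiver as its first argument.
            return vars(cls)[message](receiver, *args)
    # No class in the chain defines a method with this name.
    raise AttributeError(
        f"{type(receiver).__name__} does not understand {message!r}"
    )


dish = Dish()
time_needed = send(dish, "cooking_time")  # found in Dish itself
price = send(dish, "cost")                # found in the superclass Food
```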

Different objects can respond to the same message in different ways. This is known as polymorphism, and it gives a great deal of flexibility. In this respect a message is different from a subroutine call. A message also differs from a function in that a function says how to do something and a message says what to do. Example: draw is a message given to different objects.

Objects respond to messages according to the methods defined in their class.
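
The draw example can be sketched in Python as follows. The classes Circle and Rectangle and the returned strings are illustrative assumptions; only the message name draw comes from the text.

```python
class Circle:
    def draw(self):
        return "drawing a circle"


class Rectangle:
    def draw(self):
        return "drawing a rectangle"


# The same message, draw, is sent to each object; every object
# responds with the method defined in its own class.
shapes = [Circle(), Rectangle()]
results = [shape.draw() for shape in shapes]
```

The sender says what to do (draw); each receiver decides how.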

3. What are behavioral things in UML models? Explain two kinds of behavioral things.

Ans.- Behavioral things are the dynamic

parts of UML models. These are the verbs of a model, representing behavior

over time and space. In all, there are two primary kinds of behavioral things.

1. Interaction
2. State Machine

Interaction: An interaction is a behavior that comprises a set of messages exchanged among a set of objects within a particular context to accomplish a specific purpose. The behavior of a society of objects or of an individual operation may be specified with an interaction. An interaction involves a number of other elements, including messages, action sequences (the behavior invoked by a message), and links (the connection between objects). Graphically, an interaction (message) is rendered as a directed line, almost always including the name of its operation, as in the figure below.

Interaction (message)

State Machine: A state machine is a behavior that specifies the sequences of states an object or an interaction goes through during its lifetime in response to events, together with its responses to those events. The behavior of an individual class or a collaboration of classes may be specified with a state machine. A state machine involves a number of other elements, including states, transitions (the change from one state to another state), events (things that trigger a transition), and activities (the response to a transition). Graphically, a state is rendered as a rounded rectangle, usually including its name and its substates, if any, as in the figure below.

State
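
The elements of a state machine (states, events, transitions, and activities) can be sketched in Python as a simple transition table. The state names Idle, Running, and Paused and the event names are hypothetical; UML itself does not prescribe them.

```python
# (current state, event) -> next state
TRANSITIONS = {
    ("Idle", "start"): "Running",
    ("Running", "pause"): "Paused",
    ("Paused", "resume"): "Running",
    ("Running", "stop"): "Idle",
    ("Paused", "stop"): "Idle",
}


class StateMachine:
    def __init__(self, initial="Idle"):
        self.state = initial
        self.log = []  # record of activities performed on each transition

    def handle(self, event):
        """Respond to an event; return True if it triggered a transition."""
        key = (self.state, event)
        if key not in TRANSITIONS:
            return False  # the event is ignored in the current state
        target = TRANSITIONS[key]
        # Activity: the response to the transition (here, just logging it).
        self.log.append(f"{self.state} -> {target} on {event}")
        self.state = target
        return True


machine = StateMachine()
machine.handle("start")
machine.handle("pause")
ignored = not machine.handle("start")  # "start" has no meaning while Paused
```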

4. Write a short note on Class-Responsibility-Collaboration (CRC) Cards.

Ans.- A Class Responsibility Collaborator (CRC) model (Beck & Cunningham 1989; Wilkinson 1995; Ambler 1995) is a collection of standard index cards that have been divided into three sections, as depicted in Figure 1. A class represents a collection of similar objects, a responsibility is something that a class knows or does, and a collaborator is another class that a class interacts with to fulfill its responsibilities. Figure 2 presents an example of two hand-drawn CRC cards.

Figure 1. CRC Card Layout.

Figure 2. Hand-drawn CRC Cards.

Although CRC cards were originally introduced as a technique for teaching object-oriented concepts, they have also been successfully used as a full-fledged modeling technique. My experience is that CRC models are an incredibly effective tool for conceptual modeling as well as for detailed design. CRC cards feature prominently in eXtreme Programming (XP) (Beck 2000) as a design technique. My focus here is on applying CRC cards for conceptual modeling with your stakeholders.

A

class represents a collection of similar objects. An object is a person, place,

thing, event, or concept that is relevant to the system at hand. For example,

in a university system, classes would represent students, tenured professors,

and seminars. The name of the class appears across the top of a CRC card and is

typically a singular noun or singular noun phrase, such as Student, Professor,

and Seminar. You use singular names because each class represents a

generalized version of a singular object. Although there may be the student

John O’Brien, you would model the class Student. The information

about a student describes a single person, not a group of people. Therefore, it

makes sense to use the name Student and not Students.

Class names should also be simple. For example, which name is better: “Student” or “Person who takes seminars”?

A

responsibility is anything that a class knows or does. For example, students

have names, addresses, and phone numbers. These are the things a student knows.

Students also enroll in seminars, drop seminars, and request transcripts. These

are the things a student does. The things a class knows and does constitute its

responsibilities. Important: A class is able to change the values of the things

it knows, but it is unable to change the values of what other classes know.

Sometimes a class has a responsibility to fulfill but does not have enough information to do it. For example, as you see in Figure 3, students enroll in seminars. To do this, a student needs to know if a spot is available in the seminar and, if so, he then needs to be added to the seminar. However, students only have information about themselves (their names and so forth), and not about seminars. What the student needs to do is collaborate/interact with the card labeled Seminar to sign up for a seminar. Therefore, Seminar is included in the list of collaborators of Student.

Figure 3. Student CRC card.

Collaboration takes one of two forms: a request for information or a request to do something. For example, the card Student requests an indication from the card Seminar whether a space is available, a request for information. Student then requests to be added to the Seminar, a request to do something. Another way to perform this logic, however, would have been to have Student simply request Seminar to enroll him. Seminar would then do the work of determining if a seat is available and, if so, enrolling the student and, if not, informing the student that he was not enrolled.
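
Both forms of collaboration, and the alternative in which Seminar does all the work, can be sketched in Python. The class names Student and Seminar come from the cards; the method names has_space, add, enroll, and enroll_in and the capacity attribute are assumptions made for this example.

```python
class Seminar:
    def __init__(self, title, capacity):
        self.title = title
        self.capacity = capacity
        self.enrolled = []

    def has_space(self):
        """Answers a request for information."""
        return len(self.enrolled) < self.capacity

    def add(self, student):
        """Answers a request to do something."""
        self.enrolled.append(student)

    def enroll(self, student):
        """Alternative design: Seminar checks for space and enrolls in one step."""
        if self.has_space():
            self.add(student)
            return True
        return False  # tells the student that he was not enrolled


class Student:
    def __init__(self, name):
        self.name = name

    def enroll_in(self, seminar):
        """First design: Student asks for information, then asks for an action."""
        if seminar.has_space():
            seminar.add(self)
            return True
        return False


seminar = Seminar("Object Oriented Systems", capacity=1)
first = Student("First student")
second = Student("Second student")

first_ok = first.enroll_in(seminar)    # two-step collaboration
second_ok = seminar.enroll(second)     # single request handled by Seminar
```

In the second design, Student needs to know less about Seminar, which keeps the knowledge about seat availability on the card that owns it.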

5. Explain Modern Hierarchical Teams. Also draw its structure.

Ans.- The problem with traditional programmer teams is that it is all but impossible to find one individual who is both a highly skilled programmer and a successful manager. The solution is to use a matrix organizational structure and to replace the chief programmer by two individuals: a team leader, who is in charge of the technical aspects of the team’s activities, and a team manager, who is responsible for all non-technical managerial decisions. The structure of the resulting team is shown in the figure below.

Figure:- The Structure of a Modern Hierarchical Programming Team

It is important to realize that this

organizational structure does not violate the fundamental managerial principle

that no employee should report to more than one manager. The areas of

responsibility are clearly delineated. The team leader is responsible for only

technical management. Thus, budgetary and legal issues are not handled by the

team leader, nor are performance appraisals. On the other hand, the team leader has sole responsibility for technical issues. The team manager, therefore, has no right to promise, say, that the information system will be delivered within four weeks; promises of that sort have to be made by the team leader.

Before implementation begins, it is

important to demarcate clearly those areas that appear to be the responsibility

of both the team manager and the team leader. For example, consider the issue

of annual leave. The situation can arise that the team manager approves a leave

application because leave is a non-technical issue, only to find the

application vetoed by the team leader because a deadline is approaching. The

solution to this and related issues is for higher management to draw up a

policy regarding those areas that both the team manager and the team leader

consider to be their responsibility.

6. Explain in brief the five levels of CMM.

Ans.- A maturity level is a well-defined

evolutionary plateau toward achieving a mature software process. Each maturity

level provides a layer in the foundation for continuous process improvement.

In CMMI models with a staged representation, there are five maturity levels, designated by the numbers 1 through 5:

1. Initial
2. Managed
3. Defined
4. Quantitatively Managed
5. Optimizing

CMMI Staged Representation - Maturity Levels

Maturity Level 1 - Initial

At

maturity level 1, processes are usually ad hoc and chaotic. The organization

usually does not provide a stable environment. Success in these organizations

depends on the competence and heroics of the people in the organization and not

on the use of proven processes.

Maturity

level 1 organizations often produce products and services that work; however,

they frequently exceed the budget and schedule of their projects.

Maturity level 1 organizations are characterized by a tendency to overcommit, to abandon processes in times of crisis, and to be unable to repeat their past successes.

Maturity Level 2 - Managed

At

maturity level 2, an organization has achieved all the specific and generic

goals of the maturity level 2 process areas. In other words, the

projects of the organization have ensured that requirements are managed and

that processes are planned, performed, measured, and controlled.

The

process discipline reflected by maturity level 2 helps to ensure that existing

practices are retained during times of stress. When these practices are in

place, projects are performed and managed according to their documented plans.

At

maturity level 2, requirements, processes, work products, and services are

managed. The status of the work products and the delivery of services are

visible to management at defined points.

Maturity Level 3 - Defined

At

maturity level 3, an organization has achieved all the specific and generic

goals of the process areas assigned to maturity levels 2 and 3.

At

maturity level 3, processes are well characterized and understood, and are

described in standards, procedures, tools, and methods.

A

critical distinction between maturity level 2 and maturity level 3 is the scope

of standards, process descriptions, and procedures. At maturity level 2, the

standards, process descriptions, and procedures may be quite different in each

specific instance of the process (for example, on a particular project). At

maturity level 3, the standards, process descriptions, and procedures for a

project are tailored from the organization's set of standard processes to suit

a particular project or organizational unit. The organization's set of standard

processes includes the processes addressed at maturity level 2 and maturity

level 3. As a result, the processes that are performed across the organization

are consistent except for the differences allowed by the tailoring guidelines.

Maturity Level 4 - Quantitatively Managed

At

maturity level 4, an organization has achieved all the specific

goals of the process areas assigned to maturity levels 2, 3, and 4 and

the generic goals assigned to maturity levels 2 and 3.

At maturity level 4, subprocesses are selected that significantly contribute to overall process performance. These selected subprocesses are controlled using statistical and other quantitative techniques.

Quantitative

objectives for quality and process performance are established and used as

criteria in managing processes. Quantitative objectives are based on the needs

of the customer, end users, organization, and process implementers. Quality and

process performance are understood in statistical terms and are managed

throughout the life of the processes.

For

these processes, detailed measures of process performance are collected and

statistically analyzed. Special causes of process variation are identified and,

where appropriate, the sources of special causes are corrected to prevent

future occurrences.

Maturity Level 5 - Optimizing

At

maturity level 5, an organization has achieved all the specific goals of

the process areas assigned to maturity levels 2, 3, 4, and 5 and the generic

goals assigned to maturity levels 2 and 3.

Processes

are continually improved based on a quantitative understanding of the common

causes of variation inherent in processes.

Maturity

level 5 focuses on continually improving process performance through both

incremental and innovative technological improvements.

Quantitative

process-improvement objectives for the organization are established,

continually revised to reflect changing business objectives, and used as

criteria in managing process improvement.
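
The cumulative rule stated for each level above (specific goals for every level from 2 up to the current one, generic goals for levels 2 and 3 only) can be summarized in a small Python sketch. The names MaturityLevel, specific_goal_levels, and generic_goal_levels are hypothetical and serve only to restate the rule as described in this answer.

```python
from enum import IntEnum


class MaturityLevel(IntEnum):
    INITIAL = 1
    MANAGED = 2
    DEFINED = 3
    QUANTITATIVELY_MANAGED = 4
    OPTIMIZING = 5


def specific_goal_levels(level):
    """Levels whose process-area specific goals must be achieved."""
    # Level 1 has no assigned process areas, so counting starts at 2.
    return [MaturityLevel(n) for n in range(2, level + 1)]


def generic_goal_levels(level):
    """Levels whose generic goals must be achieved (never beyond level 3)."""
    return [MaturityLevel(n) for n in range(2, min(level, 3) + 1)]


level_4_specific = specific_goal_levels(MaturityLevel.QUANTITATIVELY_MANAGED)
level_4_generic = generic_goal_levels(MaturityLevel.QUANTITATIVELY_MANAGED)
```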